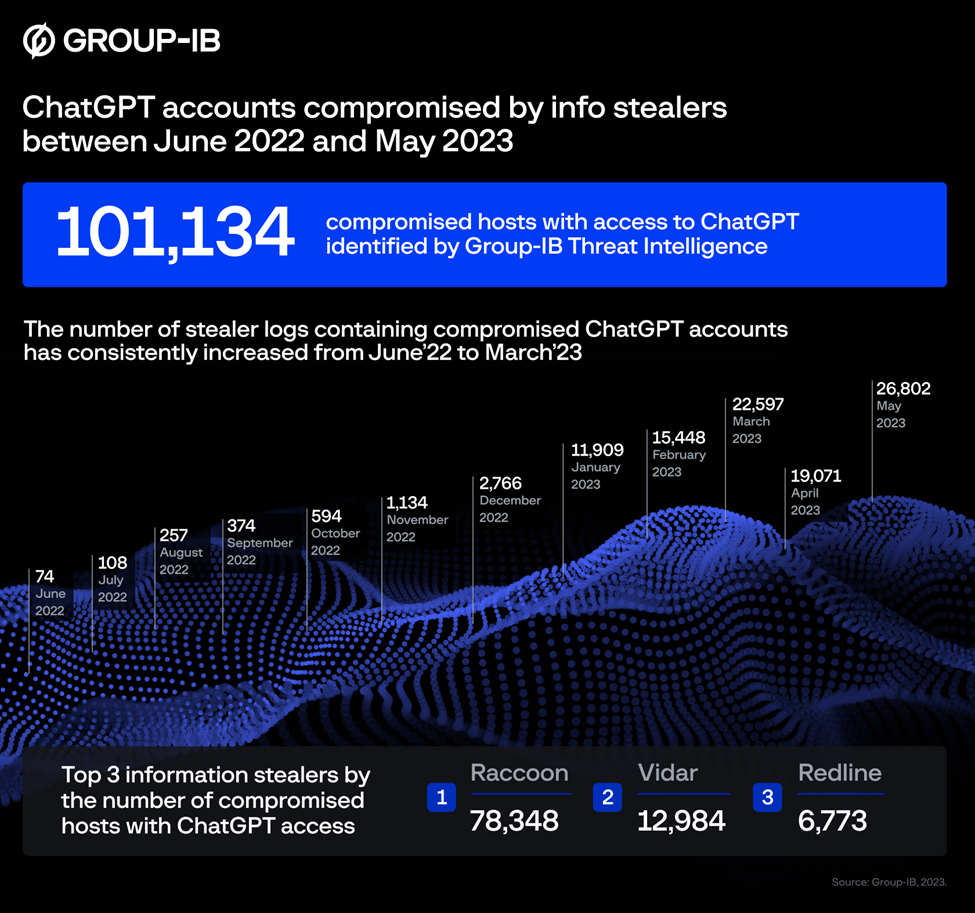

As many as 101,134 ChatGPT accounts have been compromised by hackers and are being sold on illicit Dark Web marketplaces, according to a report covering the past year released today by cybersecurity firm Group-IB.

The credentials for these accounts, it revealed, are being traded to malicious actors looking to cash in on companies rushing to adopt new artificial intelligence (AI) tools.

The number of logs containing compromised ChatGPT accounts peaked at 26,802 in May 2023, according to Group-IB's analysis of underground marketplaces.

The news is a sobering reminder that this seemingly easy-to-use and accessible breakthrough technology comes with risks that many users are still unaware of.

By gaining access to these users' accounts, hackers could, for example, find out what a company is working on and steal confidential information about its future plans.

In March, Samsung took the drastic step of banning staff from using AI tools after spotting a ChatGPT data leak. The Korean electronics giant found that employees had uploaded sensitive code to the platform.

Even Google, which is among the frontrunners pushing for more AI use in everything from managing cloud setups to creating new content in its online office productivity tools, has warned its own staff about the risks of using AI chatbots, including its own Bard.

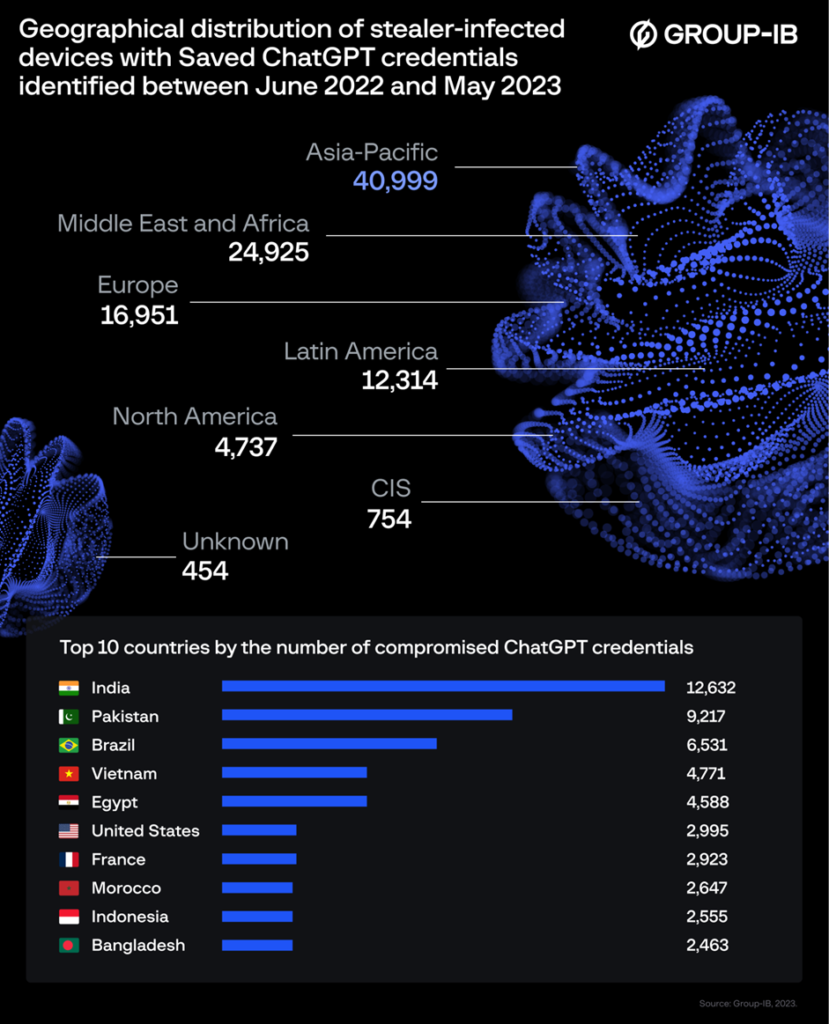

According to the Group-IB report, which is based on what has been spotted on the Dark Web, Asia-Pacific is where the most ChatGPT accounts have been stolen. Between June 2022 and May 2023, the region accounted for 40.5 per cent of the stolen accounts.

The problem could become more serious as more businesses incorporate AI tools such as ChatGPT into their everyday workflows. If users are not careful, the risks can creep in just as seamlessly as the productivity gains.

“Employees enter classified correspondences or use the bot to optimise proprietary code,” said Dmitry Shestakov, head of threat intelligence at Group-IB.

“Given that ChatGPT’s standard configuration retains all conversations, this could inadvertently offer a trove of sensitive intelligence to threat actors if they obtain account credentials,” he added.

In a somewhat ironic disclosure, Group-IB also said that the news release on this report, which it shared with the media, had been written with the assistance of ChatGPT.

It's a sign of how deeply AI is already embedded in today's workflows, just months after people first heard of ChatGPT. As with other new technologies, the challenge lies in mitigating the risks.

To that end, Group-IB advises users to update their passwords regularly and enable two-factor authentication (2FA).

With 2FA, users are required to provide an additional verification code, typically sent to their mobile devices, before they can access their ChatGPT accounts, it added.

Like other cybersecurity firms, Group-IB also offers threat intelligence to businesses, so they can be alerted to activity in Dark Web communities that trade stolen credentials.